When Prevention Becomes Performance: The NHS AI Alarm System

Monitoring Failures Instead of Fixing Them

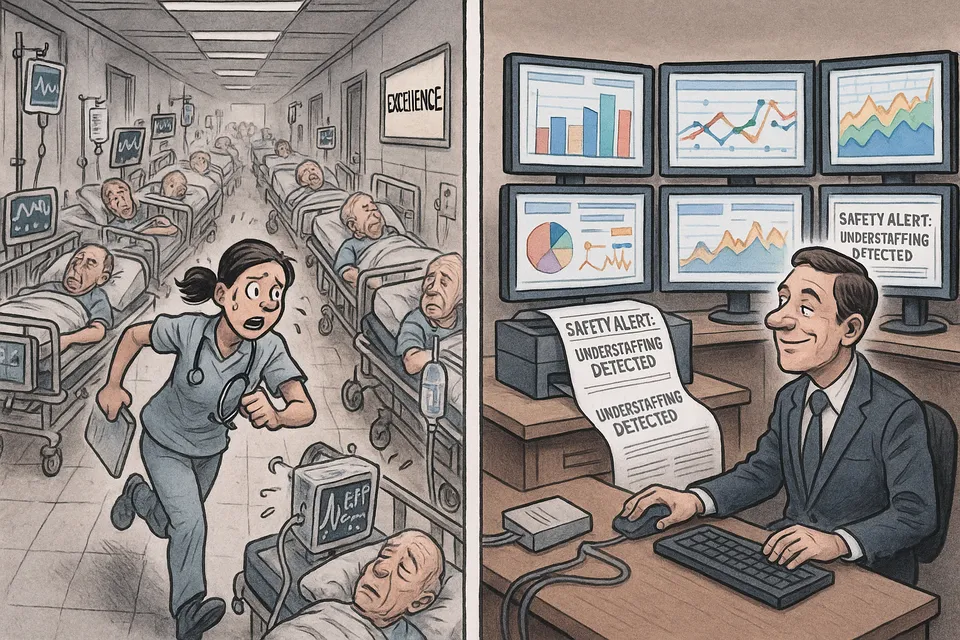

The NHS announced an AI system to detect patient safety scandals early, following murders by nurse Lucy Letby and 1,200 deaths at Mid Staffordshire Hospital. While positioned as preventive innovation, the technology monitors failures rather than addressing their root cause: chronic understaffing that leaves single nurses caring for 15+ patients. This represents classic institutional decline - sophisticated failure detection replacing basic competence in prevention.

Commentary Based On

The Independent

NHS to use AI ‘alarm system’ to prevent future patient safety scandals in world-first

What they claim: The NHS will deploy world-first AI technology to prevent patient safety scandals, moving from “analogue to digital” healthcare with early warning systems that will “save lives” and “catch unsafe care before it becomes a tragedy.”

What actually happened: After decades of preventable deaths that colleagues spotted but institutions ignored, the NHS has created an expensive monitoring system to efficiently document future scandals while leaving the fundamental cause unchanged - catastrophic understaffing that makes safe care impossible.

This is not prevention. This is damage control dressed as innovation.

The Facts Behind the Fanfare

The AI system will monitor hospital data for patterns of deaths, serious injuries and abuse, with specialist Care Quality Commission teams investigating flagged concerns. The technology launches across NHS trusts from November, focusing initially on maternity care where families have been “gaslit” in their search for truth about preventable deaths.

Meanwhile, the fundamental driver of unsafe care remains unchanged: chronic understaffing that leaves single nurses responsible for 15 or more patients simultaneously. As the Royal College of Nursing noted, “by the time an inspection takes place, it could already be too late.”

The NHS has a documented history of expensive technological solutions that fail to address institutional dysfunction. Previous IT projects have cost billions while basic care standards deteriorated. Now, after decades of scandals, the same pattern emerges: deploy technology to monitor failures rather than prevent them.

Institutional Decay in Action

This announcement exemplifies how British institutions approach systemic failure. Rather than addressing root causes, they create monitoring systems to detect the symptoms of problems they refuse to solve. The NHS knows exactly why patient safety scandals occur: inadequate staffing, poor management oversight, and cultures that prioritise institutional reputation over patient welfare.

The Letby case revealed not technological blindness but institutional blindness. Colleagues raised concerns about suspicious deaths for months before action was taken. The patterns were visible to human observers; they simply lacked the institutional power to act or were actively discouraged from speaking out.

Creating AI systems to spot these patterns assumes the problem was insufficient data analysis rather than insufficient willingness to act on obvious warning signs. This fundamental misdiagnosis reveals how British institutions protect themselves while appearing to reform.

The Familiar Script

Health Secretary Wes Streeting’s announcement follows a well-established script. First, a scandal emerges revealing systemic failures. Then come promises of reform and technological solutions. Finally, monitoring systems are implemented while the underlying problems persist unchanged.

The Mid Staffordshire scandal led to the Francis Report and promises of cultural transformation. Yet the Letby murders occurred a decade later, demonstrating that monitoring and inspection regimes cannot substitute for adequate staffing and competent management.

The pattern extends beyond healthcare. British institutions consistently respond to failure by creating new oversight bodies, implementing new technologies, or establishing new inspection regimes while avoiding the difficult decisions required to address fundamental problems.

Digital Displacement

Positioning this AI system as moving the NHS “from analogue to digital” reveals another aspect of institutional decline: the belief that technological sophistication can compensate for operational inadequacy. This digital displacement allows politicians to appear forward-thinking while avoiding the expensive and politically difficult work of properly funding and staffing public services.

The most advanced monitoring system cannot compensate for having too few nurses to provide safe care. Yet presenting technological solutions allows politicians to claim progress without addressing the resource constraints that create the problems being monitored.

The Reality Check

Three uncomfortable truths emerge from this announcement. First, NHS leadership knows patient safety scandals will continue to occur, hence the need for early detection systems. Second, they have decided that monitoring scandals is more politically viable than preventing them through adequate funding. Third, they expect the public to interpret surveillance technology as evidence of reform rather than an admission of systemic failure.

The AI alarm system represents institutional adaptation to decline rather than prevention of it. British institutions are becoming sophisticated at detecting their own failures while remaining incapable of preventing them.

This technological solution will likely succeed in its actual purpose: providing political cover when the next scandal emerges. Officials will point to the monitoring systems in place while avoiding questions about why scandals continue to occur despite advance warning. The decline continues, but now it comes with real-time data analysis and world-first monitoring systems.

In a functioning healthcare system, patient safety would be maintained through adequate staffing and competent management. In Britain’s declining system, patient safety becomes a technological problem requiring algorithmic solutions. The NHS has moved from preventing harm to efficiently documenting it.

Commentary based on NHS to use AI ‘alarm system’ to prevent future patient safety scandals in world-first by Kate Devlin on The Independent.